Shape Synthesis from Sketches via Procedural Models and Convolutional Networks

Haibin Huang1, Evangelos Kalogerakis1, M. Ersin Yumer2, Radomir Mech2

IEEE Transactions on Visualization and Computer Graphics 2017

1University of Massachusetts Amherst

2Adobe Research

Abstract

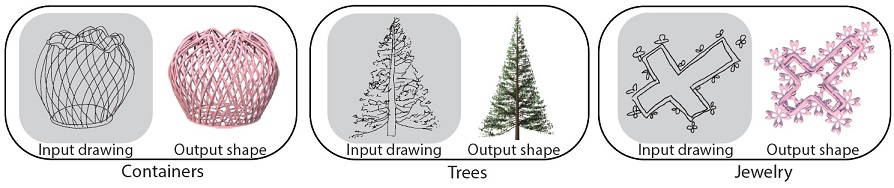

Procedural modeling techniques can produce high-quality visual content through complex rule sets. However, controlling the outputs of these techniques for design purposes is often notoriously difficult for users because of the large number of parameters involved in these rule sets and their non-linear relationship to the resulting content. To circumvent this problem, we present a sketch-based approach to procedural modeling. Given an approximate and abstract hand-drawn 2D sketch provided by a user, our algorithm automatically computes a set of procedural model parameters, which in turn yield multiple, detailed output shapes that resemble the user's input sketch. The user can then select an output shape, or further modify the sketch to explore alternative ones. At the heart of our approach is a deep Convolutional Neural Network (CNN) that is trained to map sketches to procedural model parameters. The network is trained on large amounts of automatically generated synthetic line drawings. By using an intuitive medium, i.e., freehand sketching, as input, users are freed from manually adjusting procedural model parameters, yet they are still able to create high-quality content. We demonstrate the accuracy and efficacy of our method in a variety of procedural modeling scenarios, including the design of man-made and organic shapes.
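The data flow described in the abstract (generate synthetic drawings from known parameters, then map a new sketch back to candidate parameters) can be illustrated with a toy sketch. This is not the method from the paper: the "procedural model" here is a hypothetical rectangle outline with two parameters, and a nearest-neighbour lookup stands in for the trained CNN. All function names (`render_outline`, `make_dataset`, `predict_params`) are assumptions made for illustration.

```python
GRID = 16  # side length of the toy raster "sketch" (assumed value)


def render_outline(width, height):
    """Toy stand-in for a procedural model: rasterize a centered rectangle outline.

    `width` and `height` are hypothetical procedural parameters, in grid cells.
    """
    img = [[0] * GRID for _ in range(GRID)]
    x0 = (GRID - width) // 2
    y0 = (GRID - height) // 2
    x1, y1 = x0 + width - 1, y0 + height - 1
    for x in range(x0, x1 + 1):      # top and bottom edges
        img[y0][x] = img[y1][x] = 1
    for y in range(y0, y1 + 1):      # left and right edges
        img[y][x0] = img[y][x1] = 1
    return img


def make_dataset():
    """Automatically generate (line drawing, parameters) pairs.

    The toy parameter space is small, so it is enumerated exhaustively here.
    """
    return [
        (render_outline(w, h), (w, h))
        for w in range(2, GRID + 1)
        for h in range(2, GRID + 1)
    ]


def pixel_distance(a, b):
    """Count the pixels on which two binary images disagree."""
    return sum(pa != pb for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b))


def predict_params(sketch, dataset, k=3):
    """Return k candidate parameter sets whose drawings best match `sketch`.

    NOTE: nearest-neighbour search replaces the CNN regressor of the paper.
    """
    ranked = sorted(dataset, key=lambda item: pixel_distance(sketch, item[0]))
    return [params for _, params in ranked[:k]]


if __name__ == "__main__":
    dataset = make_dataset()
    user_sketch = render_outline(6, 10)
    user_sketch[0][0] = 1  # one stray pixel, mimicking an imprecise stroke
    print(predict_params(user_sketch, dataset))  # first candidate is (6, 10)
```

Returning several candidates rather than a single answer mirrors the abstract's point that one sketch yields multiple output shapes for the user to choose from.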

Paper

[Bibtex]

Supplementary Material

More results, 40M

Code

This archive contains Caffe code for training our CNN model, which generates procedural model parameters from sketches, and code for extracting CNN features from given sketches.

Presentation

Acknowledgments

Kalogerakis gratefully acknowledges support from NSF (CHS-1422441, CHS-1617333) and NVIDIA for GPU donations. We thank Daichi Ito for providing us with procedural models, and Olga Vesselova for proofreading. Finally, we thank the anonymous reviewers for their feedback.

Haibin Huang thanks Cosimo Wei and Yufei Wang for help and discussion on Caffe.